It is not easy to settle the DPDK vs SR-IOV debate. Both are technologies used to optimize packet processing in NFV servers.

For one, you will find supporters on both sides with their claims and arguments.

Although both are used to increase packet processing performance in servers, the decision on which one is better comes down to design rather than to the technologies themselves.

A wrong decision on DPDK vs SR-IOV can significantly reduce throughput, as you will see towards the conclusion of the article.

To understand why design matters, you first need to understand the technologies, starting from how Linux processes packets.

In particular, this article attempts to answer the following questions:

- What is DPDK?

- What is SR-IOV?

- How is DPDK different from SR-IOV?

- What are the right use cases for both and how to position them properly?

- How does DPDK/SR-IOV affect throughput performance?

I recommend reading from beginning to end in order to understand the conclusion better.

What is DPDK?

DPDK stands for Data Plane Development Kit.

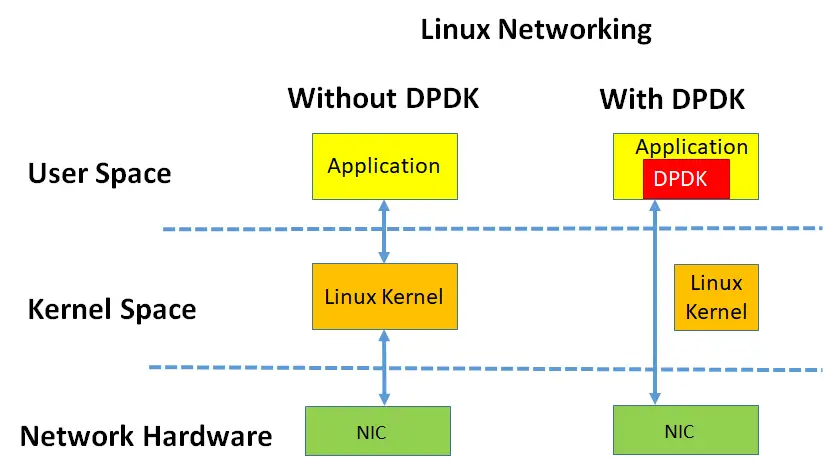

In order to understand DPDK, we should first know how Linux handles networking.

By default, Linux uses the kernel to process packets. This puts pressure on the kernel to process packets faster as NIC (Network Interface Card) speeds keep increasing.

There have been many techniques to bypass the kernel in order to process packets more efficiently. They involve processing packets in user space instead of kernel space. DPDK is one such technology.

User space versus kernel space in Linux?

Kernel space is where the kernel (i.e., the core of the operating system) runs and provides its services. It sets things up so separate user processes see and manipulate only their own memory space.

User space is the portion of system memory in which user processes run. User processes can access kernel space only through system calls.
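The system call boundary described above can be seen in a small sketch. The helper name `copy_through_kernel` is hypothetical and only for illustration. It pushes a few bytes through a kernel pipe, and each `os.write` and `os.read` is a system call that crosses from user space into kernel space and back.

```python
import os


def copy_through_kernel(payload: bytes) -> bytes:
    """Send a small payload through a kernel pipe and read it back.

    Every os.write/os.read below is a system call: the user process
    asks the kernel to move the data on its behalf.
    Intended for small payloads that fit in the pipe buffer.
    """
    read_fd, write_fd = os.pipe()          # kernel-managed buffer
    try:
        os.write(write_fd, payload)        # user space -> kernel space
        return os.read(read_fd, len(payload))  # kernel space -> user space
    finally:
        os.close(read_fd)
        os.close(write_fd)


if __name__ == "__main__":
    print(copy_through_kernel(b"packet"))
```

A packet that arrives through the normal Linux network stack makes a similar round trip through the kernel before the application sees it, which is the overhead that kernel-bypass techniques try to avoid.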

Let’s see how Linux networking uses kernel space:

For normal packet processing, packets from the NIC are pushed to the Linux kernel before reaching the application.

However, the introduction of DPDK (Data Plane Development Kit) changes the landscape, as the application can talk directly to the NIC, completely bypassing the Linux kernel.

Indeed fast switching, isn’t it?

Without DPDK, packet processing goes through the kernel network stack, which is interrupt-driven. Each time the NIC receives incoming packets, there is a kernel interrupt to process the packets and a context switch from kernel space to user space. This creates delay.

With DPDK, there is no need for interrupts, as the processing happens in user space using poll mode drivers. These poll mode drivers can poll data directly from the NIC, and thus provide fast switching by completely bypassing kernel space. This improves the throughput rate of data.
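The polling idea can be sketched as a toy model. This is not DPDK code (real poll mode drivers are written in C and receive packets in bursts with calls such as `rte_eth_rx_burst`). The names `rx_burst`, `poll_loop` and the burst size of 32 are assumptions made for illustration, and a Python `deque` stands in for the NIC receive ring.

```python
from collections import deque

BURST_SIZE = 32  # assumed burst size, for illustration only


def rx_burst(ring: deque, max_pkts: int = BURST_SIZE) -> list:
    """Take up to max_pkts packets from the ring without ever blocking."""
    burst = []
    while ring and len(burst) < max_pkts:
        burst.append(ring.popleft())
    return burst


def poll_loop(ring: deque, max_polls: int) -> tuple:
    """Busy-poll the ring; return (packets processed, empty polls)."""
    processed = 0
    empty_polls = 0
    for _ in range(max_polls):
        burst = rx_burst(ring)
        if not burst:
            empty_polls += 1   # nothing arrived; the core keeps spinning
            continue
        processed += len(burst)  # a real application would forward these
    return processed, empty_polls


if __name__ == "__main__":
    rx_ring = deque(range(100))       # 100 fake packets waiting in the ring
    print(poll_loop(rx_ring, max_polls=10))
```

The empty polls show the trade-off of this design: no interrupt or context switch is needed per packet, but the polling core stays busy even when no traffic arrives.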

DPDK with OVS

Now that we know the basics of how the Linux networking stack works and what role DPDK plays, we turn our attention to how OVS (Open vSwitch) works with and without DPDK.

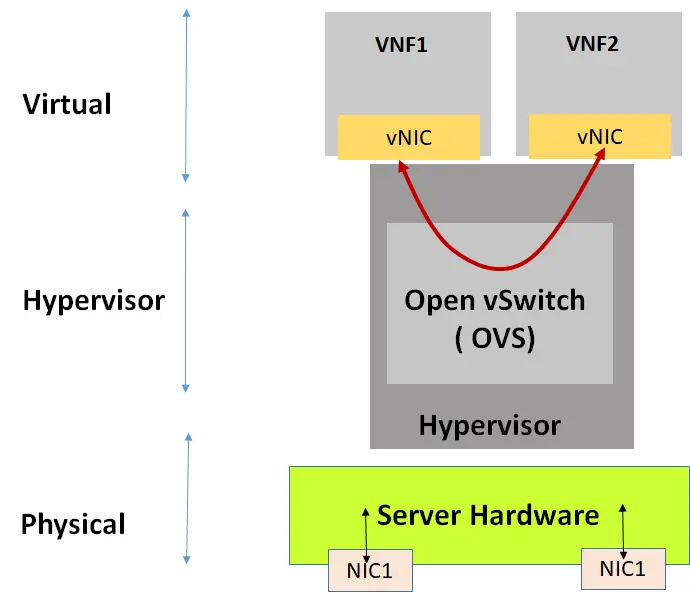

What is OVS (Open vSwitch)?

Open vSwitch is a production-quality, multilayer virtual switch licensed under the open source Apache 2.0 license. It runs as software in the hypervisor and enables virtual networking between virtual machines.

Main components include:

Forwarding path: the datapath (forwarding path) is the main packet forwarding module of OVS, implemented in kernel space for high performance.

ovs-vswitchd: the main Open vSwitch userspace program.

An OVS is shown as part of the VNF implementation. OVS sits in the hypervisor. Traffic can easily transfer from one VNF to another VNF through the OVS, as shown.

In fact, OVS was never designed for the telco workloads of NFV. Traditional web applications are not throughput-intensive, so OVS can get away with it there.

Now let’s try to dig deeper into how OVS processes traffic.

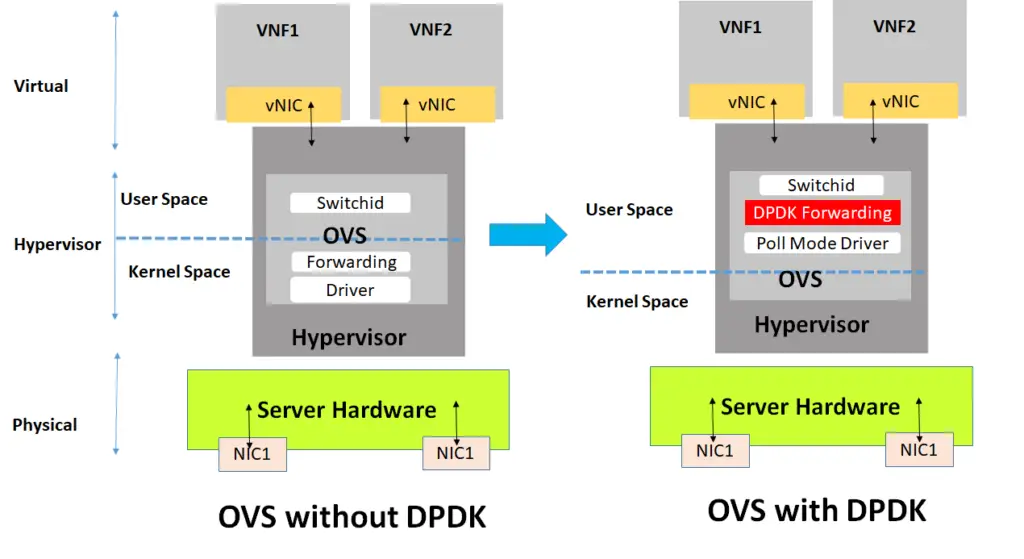

OVS, no matter how good it is, faces the same problem as the Linux networking stack discussed earlier. The forwarding plane of OVS is part of the kernel, as shown below, and is therefore a potential bottleneck as throughput increases.

Open vSwitch can be combined with DPDK for better performance, resulting in a DPDK-accelerated OVS (OVS+DPDK). The goal is to replace the standard OVS kernel forwarding path with a DPDK-based forwarding path, creating a user-space vSwitch on the host that uses DPDK internally for its packet forwarding. This increases the performance of OVS, as it runs entirely in user space, as shown below.

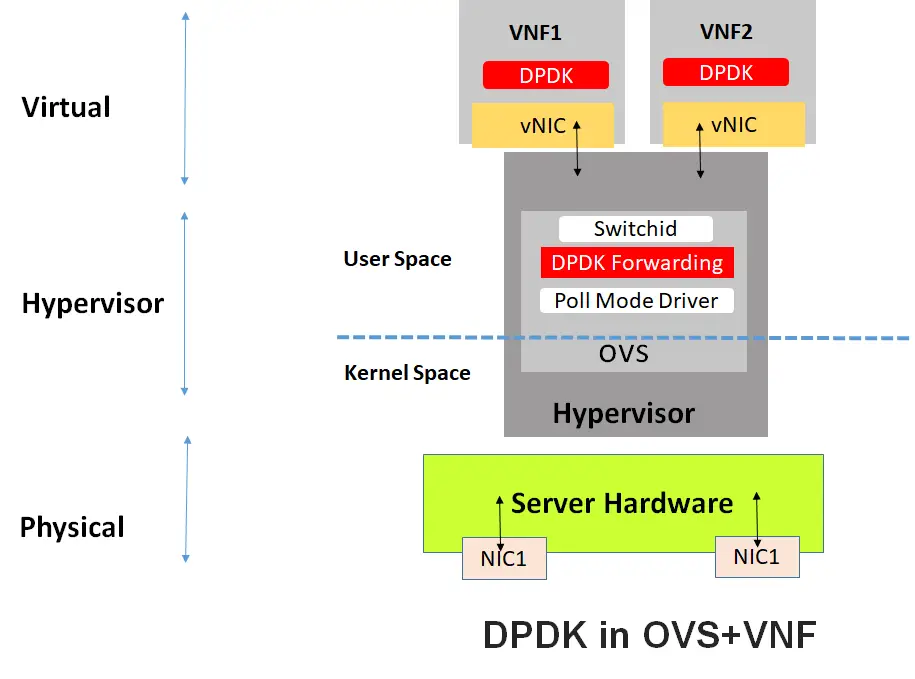
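As a rough sketch of what moving OVS to a userspace datapath involves, the helper below only builds the `ovs-vsctl` command lines and does not run them. The function name, bridge name, port name and PCI address are hypothetical. The commands follow the pattern used in the Open vSwitch DPDK documentation, but they should be checked against the installed OVS version before use.

```python
def ovs_dpdk_setup_commands(bridge: str, port: str, pci_address: str) -> list:
    """Build (but do not execute) ovs-vsctl commands for a DPDK-backed bridge."""
    return [
        # Ask ovs-vswitchd to initialise DPDK.
        ["ovs-vsctl", "set", "Open_vSwitch", ".", "other_config:dpdk-init=true"],
        # Create the bridge on the userspace (netdev) datapath
        # instead of the default kernel datapath.
        ["ovs-vsctl", "add-br", bridge, "--",
         "set", "bridge", bridge, "datapath_type=netdev"],
        # Attach a physical NIC, identified by its PCI address, as a DPDK port.
        ["ovs-vsctl", "add-port", bridge, port, "--",
         "set", "Interface", port, "type=dpdk",
         f"options:dpdk-devargs={pci_address}"],
    ]


if __name__ == "__main__":
    # Hypothetical names and PCI address, for illustration only.
    for cmd in ovs_dpdk_setup_commands("br0", "dpdk-p0", "0000:01:00.0"):
        print(" ".join(cmd))
```

The key line is `datapath_type=netdev`: it is what moves forwarding for that bridge out of the kernel module and into the userspace switch process.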

DPDK (OVS + VNF)

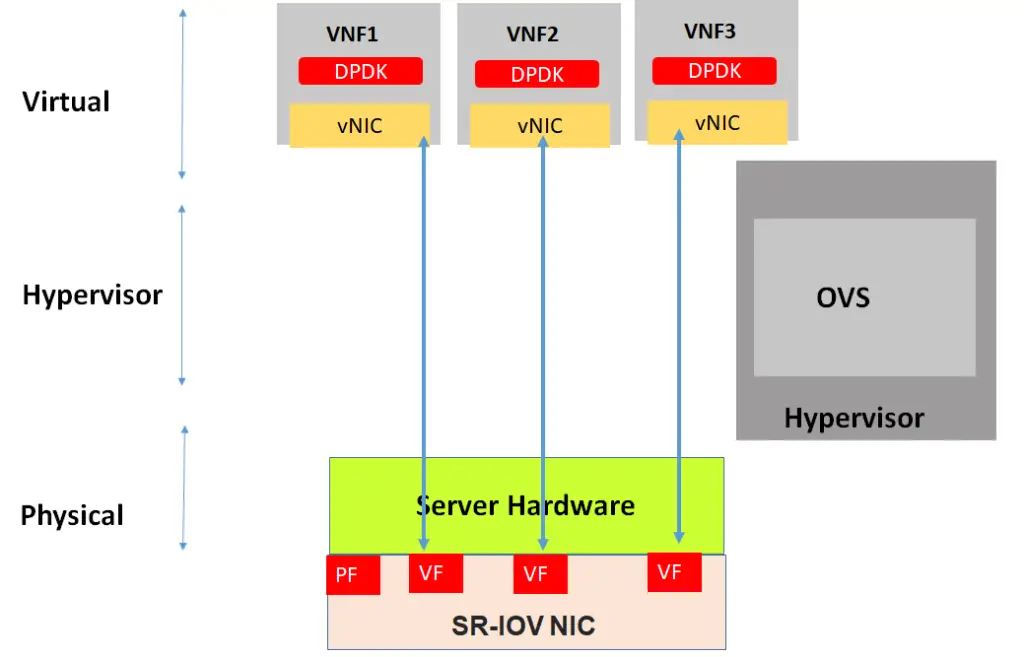

It is also possible to run DPDK in the VNF instead of in OVS. Here the application takes advantage of DPDK instead of the standard Linux networking stack, as described in the first section.

This implementation can be combined with DPDK in OVS, which adds another level of optimization. However, the two are not dependent on one another, and one can be implemented without the other.

SR-IOV

SR-IOV stands for “Single Root I/O Virtualization”. This takes the performance of the compute hardware to the next level.

The trick here is to bypass the hypervisor altogether and have the VNF access the NIC directly, thus enabling near line-rate throughput.

But to understand this concept properly, let’s introduce an intermediate step, where hypervisor pass-through is possible even without using SR-IOV.

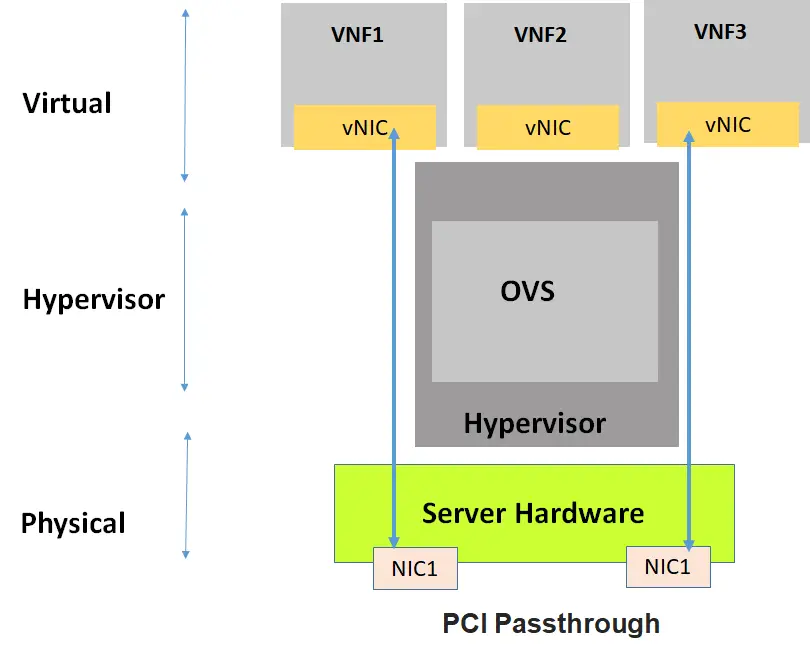

This is called PCI passthrough. It is possible to present a complete NIC to the guest OS without the hypervisor sitting in the data path. The VM thinks that it is directly connected to the NIC. As shown here, there are two NICs and two of the VNFs each have exclusive access to one of them.

The downside, however: the two NICs below are occupied exclusively by VNF1 and VNF3, and since there is no third dedicated NIC, VNF2 is left without any access.

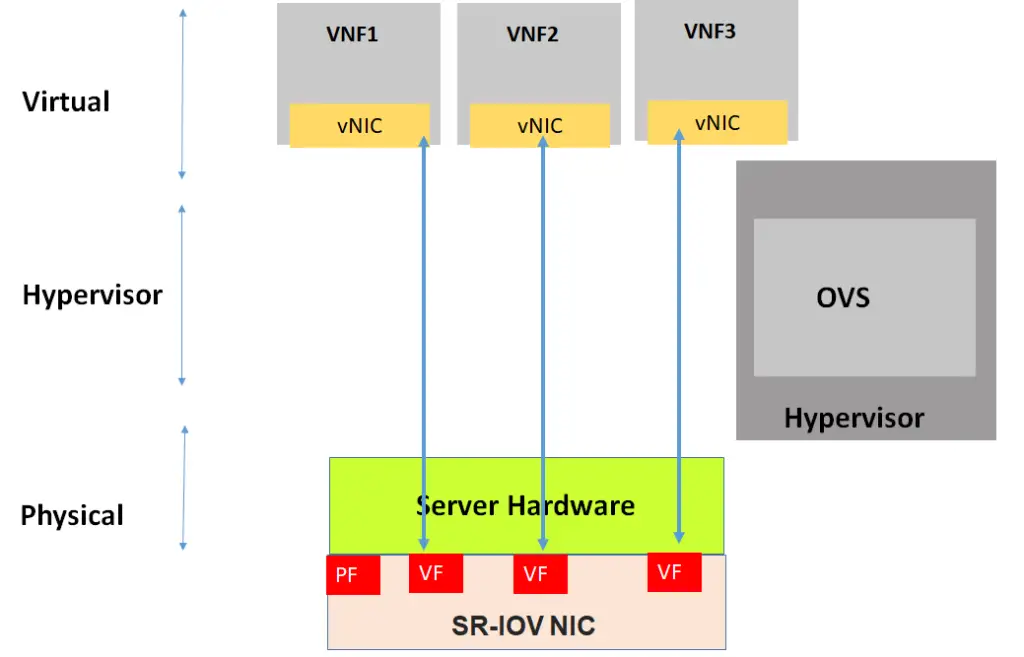
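The limitation of exclusive assignment can be sketched in a few lines. This is only a toy model of the example above: the function name `assign_exclusive` and the device names are hypothetical, and no real passthrough is configured.

```python
def assign_exclusive(vnfs: list, devices: list) -> tuple:
    """Give each VNF exclusive use of one device, in order.

    Returns (mapping of VNF -> device, list of VNFs left without a device).
    """
    mapping = dict(zip(vnfs, devices))  # zip stops when devices run out
    unassigned = [vnf for vnf in vnfs if vnf not in mapping]
    return mapping, unassigned


if __name__ == "__main__":
    # Two physical NICs, three VNFs: one VNF is left without access.
    print(assign_exclusive(["VNF1", "VNF3", "VNF2"], ["nic0", "nic1"]))
```

With one device per VNF, the number of usable VNFs is capped by the number of physical NICs. The same function leaves nobody out as soon as there are at least as many devices as VNFs.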

SR-IOV solves exactly this issue:

The SR-IOV specification defines a standardized mechanism to virtualize PCIe devices. This mechanism can virtualize a single PCIe Ethernet controller to appear as multiple PCIe devices.

By creating virtual slices of a PCIe device, each slice can be assigned to a single VM/VNF, thereby eliminating the problem caused by the limited number of NICs.

Multiple Virtual Functions (VFs) are created on a shared NIC. These virtual slices are then presented to the VNFs.

(PF stands for Physical Function. This is the physical function of the NIC that supports SR-IOV.)

This can be further coupled with DPDK as part of VNF, thus taking combined advantage of DPDK and SR-IOV.
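On Linux, the relationship between a PF and its VFs is visible in sysfs. The following sketch reads the `sriov_totalvfs` and `sriov_numvfs` attributes of a PF's PCI device. The function name and the interface name `eth0` are assumptions for illustration, and the `sysfs_root` parameter exists only so the function can be pointed at a test directory.

```python
from pathlib import Path


def sriov_vf_counts(iface: str, sysfs_root: str = "/sys/class/net") -> dict:
    """Return total and currently enabled VF counts for a PF interface.

    Reads sriov_totalvfs (maximum VFs the PF supports) and sriov_numvfs
    (VFs currently enabled). Values are None when the attribute is absent,
    for example when the NIC or its driver does not support SR-IOV.
    """
    device_dir = Path(sysfs_root) / iface / "device"
    counts = {}
    for key, name in (("total", "sriov_totalvfs"), ("enabled", "sriov_numvfs")):
        attr = device_dir / name
        counts[key] = int(attr.read_text().strip()) if attr.is_file() else None
    return counts


if __name__ == "__main__":
    # "eth0" is a placeholder; use the name of an SR-IOV capable PF.
    print(sriov_vf_counts("eth0"))
```

Enabling VFs is typically done by writing the desired number to `sriov_numvfs` as root. Each VF then appears as its own PCIe device that can be handed to a VM.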

When to use DPDK and/or SR-IOV

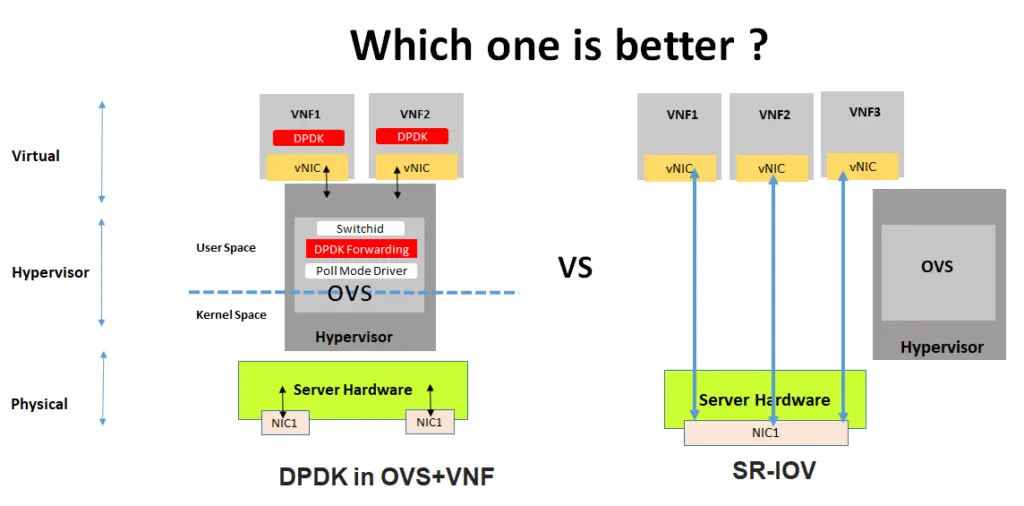

The earlier discussion shows two clear cases: one using a pure DPDK solution without SR-IOV, and the other based on SR-IOV. (There could also be a mix of the two, in which SR-IOV is combined with DPDK.) The former uses OVS and the latter does not need OVS. To understand the positioning of DPDK vs SR-IOV, we will use just these two cases.

On the face of it, it may appear that SR-IOV is the better solution, as it uses hardware-based switching and is not constrained by OVS, which is a purely software-based solution. However, it is not as simple as that.

To understand their positioning, we should understand what East-West and North-South traffic mean in data centers.

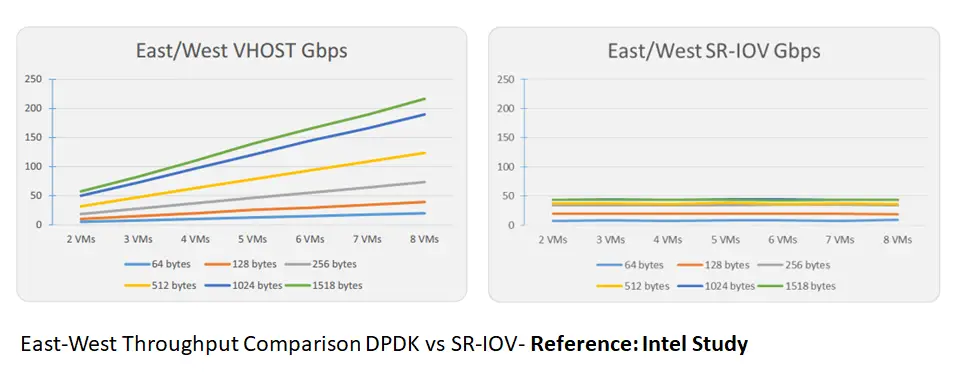

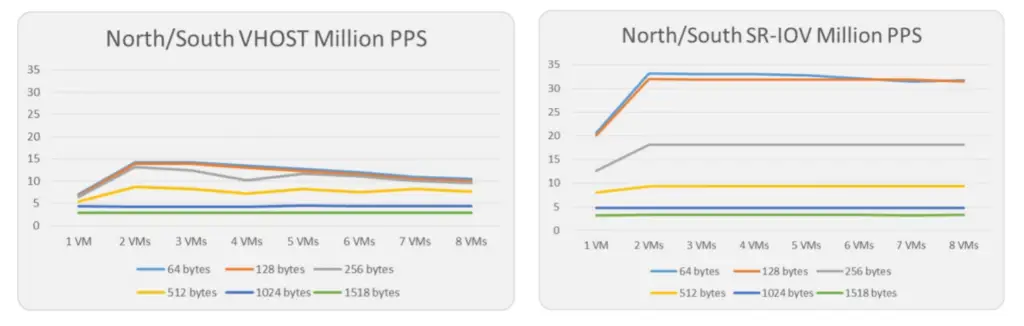

There is a good study by Intel on DPDK vs SR-IOV. It found two different scenarios in which one is better than the other.

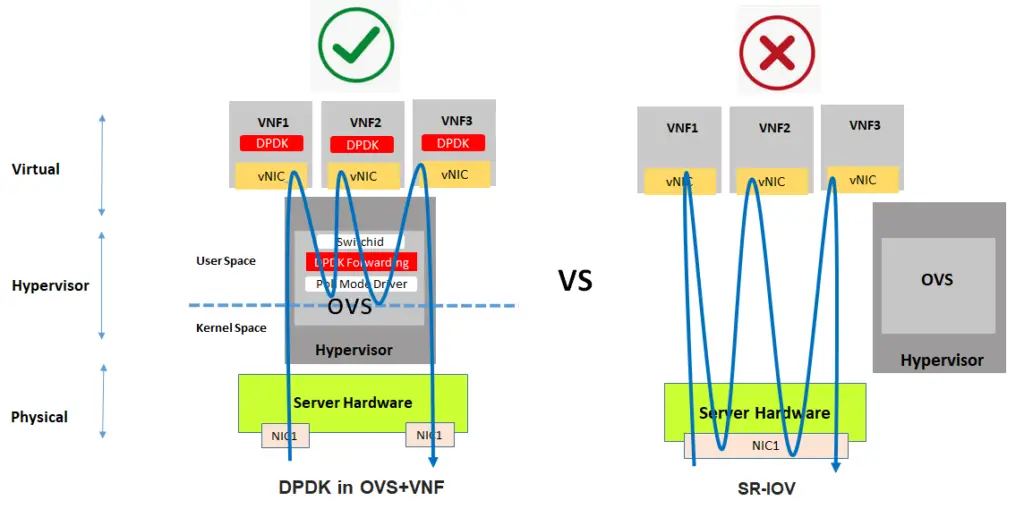

If traffic is East-West, DPDK wins against SR-IOV

In a situation where the traffic is East-West within the same server (and I repeat, the same server), DPDK wins against SR-IOV. The situation is shown in the diagram below.

This is clear from the throughput comparison in the Intel test report, shown below.

It is simple to understand why: if traffic is routed/switched within the server and does not go to the NIC, there is no advantage in bringing in SR-IOV. Rather, SR-IOV can become a bottleneck (the traffic path becomes longer and NIC resources are consumed), so it is better to route the traffic within the server using DPDK.

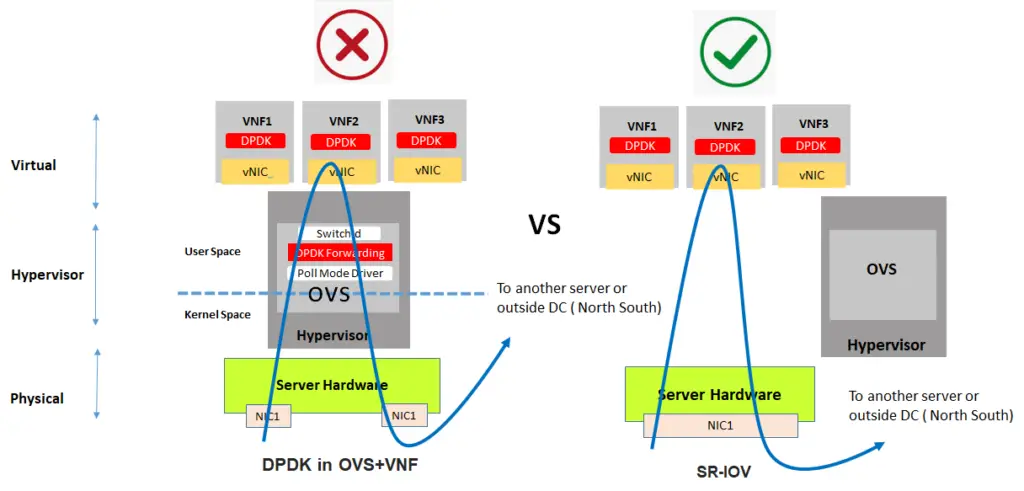

If traffic is North-South, SR-IOV wins against DPDK

In a scenario where traffic is North-South (including traffic that is East-West but goes from one server to another), SR-IOV wins against DPDK. A more precise label for this scenario would be traffic going from one server to another.

The Intel test report clearly shows that SR-IOV throughput wins in this case.

This is also easy to interpret: the traffic has to pass through the NIC anyway, so why involve a DPDK-based OVS and create more bottlenecks? SR-IOV is a much better solution here.

Conclusion with an Example

So let’s summarize the DPDK vs SR-IOV discussion.

I will make it very easy. If traffic is switched within a server (the VNFs are within the same server), DPDK is better. If traffic is switched from one server to another, SR-IOV performs better.

It is thus apparent that you should know your design and traffic flow. A wrong decision will lower throughput, as the graphs above show.

So let’s say you have a service chaining application for microservices within one server: DPDK is the solution for you. On the other hand, if you have a service chain whose applications reside on different servers, SR-IOV should be your selection. But don’t forget that you can always combine SR-IOV with DPDK in the VNF (not the DPDK in OVS case, as explained above) to further optimize an SR-IOV based design.
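The rule of thumb above can be written down as a tiny helper. This is only a sketch of the article's guideline, not a sizing tool: the `Flow` type, the host names and the function name are hypothetical, and a real design would also weigh factors such as NIC capabilities and operational constraints.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """A traffic flow between two endpoints, identified by their hosts."""
    src_host: str
    dst_host: str


def preferred_datapath(flow: Flow) -> str:
    """Apply the article's rule of thumb to a single flow."""
    if flow.src_host == flow.dst_host:
        # East-West inside one server: traffic never needs to reach the NIC.
        return "OVS-DPDK"
    # Server to server (or North-South): traffic crosses the NIC anyway.
    return "SR-IOV"


if __name__ == "__main__":
    print(preferred_datapath(Flow("server-a", "server-a")))
    print(preferred_datapath(Flow("server-a", "server-b")))
```

Running the rule over the flows of a planned service chain gives a quick first indication of which datapath the design leans towards.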

What’s your opinion? Leave a comment below.

Excellent Comparison & great insight of NFV performance challenges. Thanks.

Excellent write up. Thanks Faisal.

Can you also share few successful deployments

Thanks Saroj, I am afraid I dont have any public info about deployments

Thanks Aman ! Glad that you liked it

This is a concise explanation.. I have a question, is it possible to combine DPDK and SR-IOV in way that traffic between VNFs within a server use OVS+DPDK while traffic between a VNF and outside is routed via SR-IOV directly? In other words maybe there can be a process that decides when to use OVS+DPDK or SR-IOV (i.e. if you have a capable NIC that supports SR-IOV)

Interesting Comment Christopher !, I am not aware of this kind of implementation. Yet this would be interesting to have to get the best of both worlds

You can have both paravirtualization and SR-IOV interfaces in the VNF. You just need to route to the best interface depending your traffic is East-West or North-South.

Even better: the VNF can be a DPDK application: all types of interfaces are supported in DPDK, and there are some routing libraries.

Thanks Thomas for sharing your thoughts. Agreed.

That was brilliant articel Faisal. Thanks.

It appears to me that still there are a lot of details on NFV true implementation challenges that need to be discussed. Thank you for opening this topic.

Thanks Askar for stopping by to read my blog

I just know that there is an implementation of DPDK in VNF for optimizing the VNF in accessing the virtual network layer. Is this using the same DPDK kit or there is another DPDK for this purpose?

Thanks for commenting. Yes, this is the same DPDK used in VNF. But as the article shows that DPDK can be implemented in OVS as well as VNF…

A very good comparison and source of information.

Thanks David, Glad that you liked it

A very good read. Thanks for explaining it so nicely.

Thanks Harish, Glad that you liked it

Amazing explanation and presentation in simple way to understand easily

sir, if you may post something on below topics

1. L3VPN

2. L2Gw

3. VxLAN

4. overlay and underlay

5. GRE

all in respect of current use into virtual data centers.

in addition

SD WAN

and orchestration

Thanks Karun for stopping by to read my blog. I will consider your feedback for future blogs….

Nice comparison. Very helpful.

Request to provide more scenarios on OVS-DPDK, where you use it in real time.

Thank you Kranthi for your comments !

Thanks Kranthi, I am not sure if I got your question correctly..

Nice and simple explanation. Thanks for sharing 🙂

Thank you Priyesh for visiting and commenting

Thanks, very informative

Thanks a lot, Well described and explained.

Very very informative article!

Detailed and yet simple to understand.

A must read for beginners in networking enthusiast

Excellent explanation, Faisal. Thank you! Can you comment on the limitations of SW based DPDK and SRIOV in terms of performance and latency and how using an accelerator (FPGA) can improve both of those.

The best article I’ve ever seen about DPDK and SR-IOV. Thanks !

Hi Luciano, thank you…you made my day 🙂

Great Explanation.

Would it be possible to include VPP as well?

Thanks Kunal and for your inputs too

Thanks Faisal for educating others in a very simplified way!

Thanks Mohit for your feedback

Excellent explanation.You made my day .Need some more !!

and you made my day 🙂 …thanks Satyam

Thanks for great article!

I think workload mobility and implications of SR-IOV for such cases should be included in the overview.

Thanks for your feedback Jeff Tantsura

Thanks for the article, very informative. A real NE in telco will most likely need SR-IOV for high throughput, and it will surely sit on top of multiple hosts/physical servers (for redundancy and capacity). But this comes at a cost: it is not easy to do “live” migration without interrupting the service, and it is less flexible because it requires special mapping to the physical NIC (compared to OVS).

The logical choice would be to limit SR-IOV to the telco “NE” and use OVS for the rest (EMS, management, etc.).

Agreed Budiharto

Never seen anyone explain it better. thank you so much

Thanks Sam, glad that you liked it

Another great article Faisal!!!

A unique blend of story telling combined with technology enlightment.

I am hooked to your articles.

Thank you so much Sandeep

Is there any relationship here with SDN enable network?

Salam Sorfaraz, the behaviour should be same irrespective of the use of SDN or not.

Really very useful and informative article with clear explanation and logical diagrams. Thanks

Thanks a lot Jayaprakash ! glad that it was of help to you

This is one of the simplest explanation of such a complex topic that I have seen till now. This article is like a treasure for me. I am thankful to you that you have published it to educate others.

Dear Ashwani, Thanks a lot. This is very encouraging comment for me….please spread the good and share the article to your circle…

Excellent review in a very simply and logical steps. Thank you for the great efforts

Thanks Akmal, Glad that you stopped by and liked this piece.

You have dealt with a complex topic in the best possible simple way. Really amazed by your capability to explain things in such a simple manner.

Thanks Arvind for stopping by to read the blog…

Very Informative Article , Thanks Faisal!

Thanks Eslam for stopping by to read this piece.

Excellent post Faisal. You really simplified it for me. My team is in the process of implementing SR-IOV vs DPDK for a Telco and this is the kind of information I needed to understand the concepts better.

I will probably come back in a few months with some practical view point that I can add from our field experience.

Wow that will be wonderful to get the practical viewpoint

how did it go?

Very very neatly explained Faisal. Good work !

Great to have you here Rakesh !

Good morning FAISAL KHAN,

This article very clear and easy to understand. Can I translate and add some more info this article to Vietnamese before share to my Colleages?

Original link will be keep at heading of my article.

Thanks you!

thanks duy, answered through mail

sir, for me vnf and nfv is quite confusing and hardly can differentiate these two, can you please add some detail to differential both

This post does open my mind. But it is so good if we can show steps to be practical. To see and trust.

Thanks Thuan, can you elaborate a little bit

Excellent Article !!!

Thanks Neetesh

Really, explained in very easy format.

really helpful

Sandeep, thanks for visiting and liking it.

good report!!

In “If traffic is North-South, SR-IOV wins against DPDK”, who dicide the route to VNF2?

To controll the route to VNF1,3, OVS-like Switch is nessesary. Outer switch?

Sorry Simiter, not able to get you !

Really very well explained. good article.

Thanks Sandeep, good that you liked it

great explination ..excellent 🙂

Many thanks…

Thanks Ajay for stopping by to read and liking it.

Faisal, indeed a great way and simplified one to explain the complex topics together.

Zahid, great to know that you liked it

“If You Can’t Explain it Simply, You Don’t Understand it Yourself”

Excellent Description. Thanks,

Thanks a lot Jessica

Really explained very well and any one can understand it. Keep posting more and more pls. to educate rest of the world. Rarely seen such detailed write-ups.

Thanks Latha for taking to read and commenting.Glad that you liked it

Excellent Sir !!

please share the DPDK and SR-IOV commands for regular operations and debug ?

Thanks Naga ! I am not sure, can you clarify further

simple and superb, as i am fresher to this technology still

grasped the essence!!

Thanks a Lot!!

Thank you so much Sunil, you made my day 🙂

nicely explained.

Thanks Simon

Hi,

Brilliant explanation! Its simple and precise.

As you explained in DPDK, kernel is bypassed and userspace polls for the packets. Are you aware of Enhanced Network Stack of VMware? It is a DPDK based stack in which the kernel itself does the polling.

Do you think it will be a better solution for telcos? Better than simple DPDK or SRIOV?

Hi Anand, thanks for commenting. I am not aware of VMWare stack, I will have to check it up.

Thanks Faisal for making the Complex topic so Simple. Kudos to you.

How about next blog on VPP ?

Thanks.

Sounds like a good idea ! Thanks Jatin for suggesting.

appreciate this topic explanation.

indeed very helpful.

Thanks Syed asfar, for taking time to read and liking it.

Thanks, Faisal for this excellent write-up. This was really helpful.

Thanks Saif, Glad that you liked it

can you please correct the typo “DPDK stands for Data Plan Development Kit”.

it is awesome to see how simplified your contents are even though the topic are complex .thumbs up !!

Good feedback Ajay, this post guests hundreds of visitors every day, you are the first one to point it out.

Thanks Faisal, So easy to understand your write up on complex technologies

Thanks Amarinder

Very nice explanation .. Awesome Job Faisal

Thanks Saurabh, Glad that you liked it

very very good article , but some pic can not be found , could you please to fix it ? many thanks

Thanks, keling, Can you clarify, as everything is visible at my end. which browser you are using, can you change it.

Wow…Amazing explanation… right on target…So difficulty subject but you made it so easy…well done

Glad that you liked it Chandra, keep visiting back !

Very well explained Faisal, thank you!

I have a little doubt, however. As you explained, with SR-IOV the VM can already talk directly to the server NIC (using a VF). What does DPDK provide for in a SR-IOV+DPDK scenario?

Cheers!

Hi Eduardo, thanks for your question. Some applications are built for DPDK (which does not necessarily mean they have to use OVS for switching). They can still be used with SR-IOV, thus taking advantage of SR-IOV fast switching.

great work!! excellent

Thanks Rahul, its nice to see that you liked it.

Awesome for any new learner . Basic to deep explanation. Very simple way feeding full meal.

Hello Ranjeet, glad that you liked it.

Wonderful comparison , thanks for knowledge sharing

Thanks Ishrat, Glad that you liked it.

Hi,

Very nice explanation thanks.

I see in the DPDK diagram, OVS is included in your explanation but not in SR-IOV.

Is the OVS required for switching traffic? How do you explain that?

Thanks

you are correct Ashok, not needed in case of SR-IOV, but needed for DPDK for switching traffic.

Can that be corrected from the diagram now? That adds more clarity.

Thanks

Sorry, Ashok , did not get you. Any issue with the diagram ?

Hi,

Thanks for this lucid yet informative blog post!

Can you please elaborate my below points:

You wrote that

“East-West” traffic refers to traffic within a data center — i.e. server to server traffic.

Also it is better to use OVS-DPDK for east-west traffic.

However while you described about North-South traffic (heading : “If traffic is North-South, SR-IOV wins against DPDK”), you mentioned in the picture to use SR-IOV for within server or outside DC?

Doesn’t it conflict the previous statements?

@Faizal. Thanks for this detailed write-up

@subhajit. The scenario defined to compare both technologies are subject to a condition where VM1 and VM2 reside in the same compute/hypervisor/host.

East-West also includes scenario where VM1 and VM2 are on separate compute/hypervisor/host as shown above North-South diagram. This is by far the most common kind of East-West communication that I have seen in the multiple on-premise cloud sites I have worked on. So SR-IOV is preferable.

But again, it all depends on your VNF network architecture.

Thanks Ravi ! for your inputs here

Hello FAISAL KHAN,

Thank you so much for the neat explanation. Any one can understand on how SR-IOV and DPDK works.

One question : Does DPDK work well on hybrid setup like baremetal and VM combinations located in a same data center ? or its a wise idea to go with SRIOV in this case.

Thanks again,

Hem

Hello Hem, I am not sure if I understood your question well. Can you clarify.

Very well explained thanks

Thanks Saroj

It was nicely summed up. Thanks for the insights.

Would it be possible to share some insights on the RAM and CPU utilization comparison for OVS-DPDK and SRIOV based Networks?

Also, how do we troubleshoot SRIOV networking between the VM and the Physical NICs?

Hi Karthik,

I am afraid these questions are beyond the scope of this article and I am not familiar with them

Using SR-IOV networking means the physical server releases the adapter (from host kernel space) and the adapter is assigned to the VM kernel space (you can validate this yourself with a simple test: look inside /proc/net and watch the network card disappear from your physical server and come back after the VM stops).

In summary, troubleshooting from the VM is quite similar to what you would do from a physical server.

Hi, I have a question. Im still confused about where DPDK should be installed. As far as I know, DPDK only installed on host server and then combined with OVS. But, in the above picture, there is DPDK installed inside VNF. So eaxh time we create VNF, we should install DPDK?

And VNF in here is VM/Instance right?

DPDK is installed on the server for sure, and that is the default way. But some VNFs are optimized for the DPDK stack. Yes, a VNF is a virtual machine.

Good Articulation and clear explaination.

Good Work Faisal.

Thanks Atul, Glad you liked it !

Thank you for sharing this article. Can I translate this article into Korean?

Hi Changsu lm, I know you already translated to korean. Thanks a lot

Hi

it is a very good article.

I think it will be better if you add VIRTIO to the same topic, it is also an important part of the IO in virtualization.

Hi Omar, that sounds like a good idea !

Hi Faisal,

Thanks for the detailed analysis and explanation.

I have a question, are there any other methods apart from SR-IOV and DPDK to optimize packet processing in Virtualized environments ? If yes could please share some insights on those.

Hi Sai, yes there are , as Omar mentioned Virtio is one of them !

Thank you very much Faisal for a detailed description with diagram.

Thanks Deepak

Hi Faisal,

Very difficult topic explained in very simple way. Thanks!!

I have below queries. If you have time could you please clarify these?

1) In case of SR-IOV, VFs traffic does not pass through hypervisor kernel? Does this mean that VFs are pass through to VNFs, similar to PCI-Passthrough but of course virtual?

2) Isn’t SR-IOV slower than PCI-Passthrough as network Bandwidth will be split into multiple vNICS?VFs?

3) When we say we are only using DPDK (without OVS), does this mean DPDK + Linux bridge?

Regards,

Uday

Sorry Uday for getting back late on this one, as I missed the comment altogether.

1. Yes. But do take a note that SR-IOV can also use PF, see for reference this article

https://docs.microsoft.com/en-us/windows-hardware/drivers/network/sr-iov-architecture

2. Need to check. But apparently, your understanding seems correct.

3.DPDK enhancements in VNF, not the DPDK which runs with the OVS

When you mention VFs and switching between them, please mention them as per port. Not across NIC. You can switch between VFs of same port ?

Hi Ram, Thanks for the useful comment. Can you please clarify your question further. Sorry for being late on the response, as I missed the email altogether.

Faisal Khan, Very nicely explained the concepts of DPDK, OVS, OVS+DPDK, SR-IOV, PCI Pass through and corresponding usage. Thanks a lot for insightful article. Keep writing.

Thank you Anand !

Hi Faisal. I keep coming back to this article every now and then as we are dealing with interface performance issues in an OpenStack telco cloud deployment. I wouldn’t name the organization but I have made you famous and you have made me famous at my work place.

Not like some other articles claiming to be technical experts while doing jugglery with adjectives. Aptly put, clearly articulated, easy to understand for people not necessarily having infrastructure or linux background. Thanks a ton and hope you keep posting.

Hello Ahmed, thanks a lot ! I appreciate your love of spreading the article!

Hi Faisal,

To be honest now i only came to know about the basic difference b/w DPDK&SR-IOV after reading this article.

Before this it seems like , This is next to impossible to understand these fancy words.

You explained it in very fundamental way, Many thanks to you Sir!!

Love to read your articles (Always 👍👌)

Dear Fasel

Excellent article and nice explanation. Really appreciate the work.

Thanks Ravinder Sharma

This is the good comparison and explanation of SR-IOV and DPDK. would like to see more on SR-IOV flow and mechanism.

Thanks Dilshad for commenting and giving your feedback

Excellent and lucid explanation !!

Thank you so much.

Thanks Purvesh, for coming over and commenting !

Thanks for clarification.

Thanks a lot Eyyup!

Hi Faisal,

Thanks for the interesting article.

I think we can combine SR-IOV and OVS in one server, use case is some VNF needs OVS functionalities, whereas some needs SR-IOV.

As you know still live migration is a big headache in SR-IOV, so not to waste my compute host , can we have OVS and SRIOV in one compute ?

Best Regards,

Aman Gupta

Excellent – short and crisp writeup

Hi PKJ, Glad that you liked it.

Thank you for a great and easy comparison , it is very helpful.

That is good to know Daman

Excellent write-up, very clear, thanks a lot

Thanks for the feedback VIJAY !

I thought SRIOV is good for bandwidth demanding VNF’s and DPDK is good for transaction based applications/VNF’s. What are your thoughts on this.

Hi Praveen, yes SRIOV is good for bandwidth demanding VNFs, could you elaborate on the “transaction based VNFs”

Hi Faisal,

Thanks for the article. It is really good.

I’m commenting after 2 years from the post but I hope my comment can benefit the readers.

There is an important difference between DPDK and SRIOV: packet filtering (iptables or firewalling) can be achieved with DPDK but not with SRIOV, because SRIOV by nature bypasses the kernel (which applies the filtering rules).

This is a drawback of SRIOV in many cases.

This is very good feedback/comments. Thanks for sharing your views Mohammed !

perfect !!!!

Thank you Javed for coming to my blog and commenting

Excellent article, btw I will test them and post results asap.

Thanks Alexendre, I will be very interested to see the results.

Great Article Just read it Clear to the point and understandable

Thanks a lot Bhupinder Grewal

Excellent Article for who doesn’t know anything about SRIOV.

Thank you Janakiram

Good info.

Thanks a lot Faizal, I can’t stop my self to read this article. You are great teacher the way you explained the things.

Hey Anurag, thank you…sorry for being late. your comment made my day

Excellent

Thanks a lot Janhabi

Can u post video with practical & traces

Hi Faisal,

This is the most user-friendly intro to dpdk and sr-iov I’ve read so far. Thank you for writing it!

All the best.

Hey kjkent, sorry for being late and thank you for visiting my blog

Thank you kjkent , your comment made my day

Thanks for the great explanation

Thank you so much Ashok

Thanks for the great blog, clarified a lot. However, I have one question: When SRIOV is already bypassing complete hypervisor, what advantage is obtained by combining DPDK with SRIOV. As I understood, DPDK allows forwarding to be happened in User-space rather than Kernel space, but as SRIOV is already bypassing hypervisor, whats the point of user & kernel space. Can you please clarify?

Hey thanks, Please do read the last two paragraphs before the conclusion and let me know if still not clear.

Very Very Helpful and very informative….the Explanation was Very simple and clear.Awesome@

Thanks Faisal

thank you so much for this awesome explanation